Senior Thesis Project

Shopping Stories started as a Senior Design Thesis Project for my undergraduate degree at the University of Central Florida. The director of History Revealed, Inc., a non-profit focused on historical research, proposed aggregating all of the organization's existing research into a centralized database. The initial scope was simply to take the gigabytes' worth of data stored in Excel files and load it all into a database.

Once our team of five students was formed (four others and me), we divvied up roles. I took on the role of Project Manager: my job was to relay information and tasks from our sponsor to the team, set up weekly meetings, manage weekly sprints, and monitor the team's progress. The project ran for about 8 months in total.

For the project management side, I set up the GitLab repository, a Jira Kanban board, weekly Zoom meetings with our sponsor, a Discord channel for the group, and Gantt charts, and I used various other tools to help us tackle the task ahead of us.

After a few weeks of research and many conversations with our sponsor, we decided to expand her original scope. The new scope included a fully functional website that would house her new database and give her non-profit's supporters a way to learn more about the project and the research behind it.

Our final solution stack, or tech stack, was what we called 'NEVN':

Neo4j, a graph database, which let us model meaningful relationships within the complicated data.

ExpressJS for the backend that served the website.

VueJS for the frontend, using the Vuetify plugin.

NodeJS, which tied everything together during development and production.

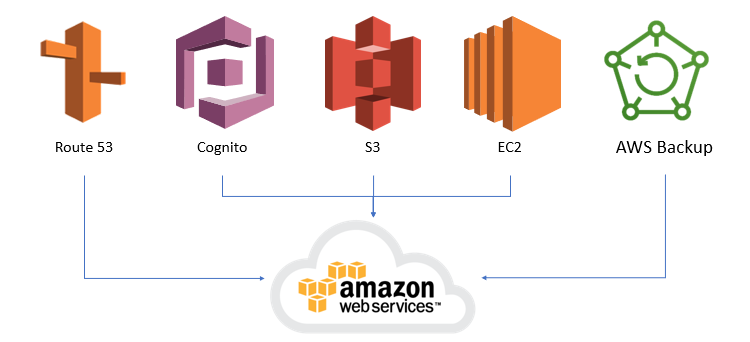

We decided to use Amazon Web Services for deployment because of the credit program AWS offers non-profits. This allowed the team to work with awesome tools such as EC2, S3, Route 53, and more.

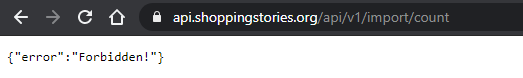

As Project Manager, I touched nearly every aspect of this project, but I primarily built the entire backend that served the website and set up the deployment on AWS. On the backend, the top priority was keeping the API secure. I set up JWTs (JSON Web Tokens) for privileged access, and the API rejected any request that lacked a valid token with the required permissions.
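
Below is a minimal sketch of that kind of token check, written in TypeScript to match the Node/Express backend. It is not the project's actual code. For brevity, it assumes HS256 tokens signed with a shared secret. Cognito-issued tokens are RS256-signed and would instead be verified against the user pool's published public keys (JWKS). The `cognito:groups` claim mirrors the one Cognito includes in its tokens. The function names `verifyHs256` and `authorize` are hypothetical.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Claims this sketch checks. "cognito:groups" mirrors the claim Cognito adds;
// sub and exp are standard JWT claims.
interface Claims {
  sub: string;
  exp: number; // expiry time, in seconds since the Unix epoch
  "cognito:groups"?: string[];
}

// Decode a base64url JWT segment as JSON; null if it is not valid JSON.
function decodeJson<T>(segment: string): T | null {
  try {
    return JSON.parse(Buffer.from(segment, "base64url").toString("utf8")) as T;
  } catch {
    return null;
  }
}

// Verify an HS256-signed JWT (assumed shared secret). Returns its claims, or null
// if the token is malformed, uses another algorithm, was tampered with, or expired.
function verifyHs256(
  token: string,
  secret: string,
  nowSec: number = Math.floor(Date.now() / 1000),
): Claims | null {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, payload, signature] = parts;

  const parsedHeader = decodeJson<{ alg?: string }>(header);
  const claims = decodeJson<Claims>(payload);
  if (!parsedHeader || !claims) return null;
  // Only accept HS256; this also rejects tokens claiming "alg": "none".
  if (parsedHeader.alg !== "HS256") return null;

  const expected = createHmac("sha256", secret).update(`${header}.${payload}`).digest();
  const given = Buffer.from(signature, "base64url");
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) return null;

  if (typeof claims.exp !== "number" || claims.exp <= nowSec) return null;
  return claims;
}

// 401 for a missing or invalid token, 403 for a valid token that lacks the
// required group, 200 otherwise.
function authorize(
  authHeader: string | undefined,
  secret: string,
  requiredGroup?: string,
): { status: 200 | 401 | 403; claims?: Claims } {
  if (!authHeader || !authHeader.startsWith("Bearer ")) return { status: 401 };
  const claims = verifyHs256(authHeader.slice("Bearer ".length), secret);
  if (!claims) return { status: 401 };
  const groups = claims["cognito:groups"] ?? [];
  if (requiredGroup && !groups.includes(requiredGroup)) return { status: 403 };
  return { status: 200, claims };
}
```

In an Express app, a middleware could call `authorize(req.headers.authorization, secret, "someGroup")`. It would then respond with the returned status whenever that status is not 200.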

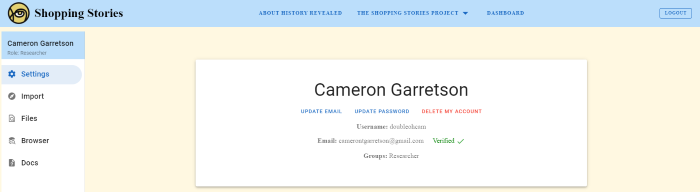

The API served the website in many ways. One major part was user management: users could register a new account, log in, edit their attributes, reset a forgotten password, and more. I also built what was essentially a simplified version of the AWS Cognito user management console, available only to our sponsor's account. From it, she could edit any user and add users to groups such as her research team, which unlocked more features on the website. She could also delete users, update emails and passwords, and so on.
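
One way to model this kind of group-based access is a small table that maps groups to permissions. This is a hypothetical sketch, not the project's code. The group names (`research`, `admin`) and the permission names are invented for illustration. Modeling the sponsor-only console as an `admin` group is also an assumption about how that restriction might be expressed.

```typescript
// Hypothetical permission names, for illustration only.
type Permission = "viewPublic" | "uploadSpreadsheet" | "downloadFiles" | "manageUsers";

// Granted to everyone, including anonymous visitors.
const BASE_PERMISSIONS: readonly Permission[] = ["viewPublic"];

// Hypothetical group names; in Cognito these would be user pool groups.
const GROUP_PERMISSIONS = new Map<string, readonly Permission[]>([
  ["research", ["uploadSpreadsheet", "downloadFiles"]],
  ["admin", ["uploadSpreadsheet", "downloadFiles", "manageUsers"]],
]);

// Union of the base permissions and those granted by each group; unknown groups add nothing.
function permissionsFor(groups: readonly string[]): Set<Permission> {
  const granted = new Set<Permission>(BASE_PERMISSIONS);
  for (const group of groups) {
    for (const permission of GROUP_PERMISSIONS.get(group) ?? []) granted.add(permission);
  }
  return granted;
}

function can(groups: readonly string[], permission: Permission): boolean {
  return permissionsFor(groups).has(permission);
}
```

Keeping the mapping in one table means that adding a group, or giving an existing group more features, changes data rather than individual route handlers.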

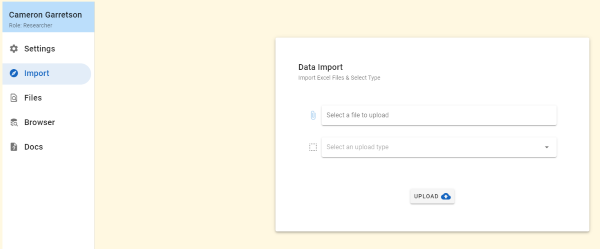

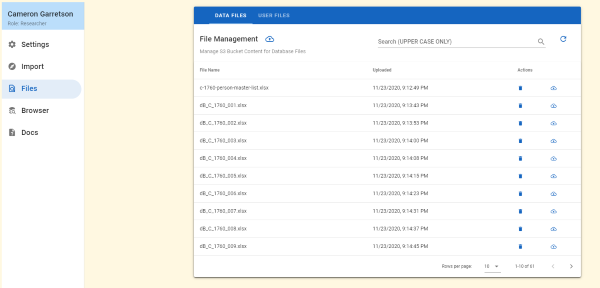

Another important job of the API was to take an Excel file that a user uploaded to the website, parse it, and insert the data into the database. The file was first uploaded to a dedicated S3 bucket, where the backend picked it up. The backend converted it to JSON, then translated that JSON into a Cypher query that Neo4j could execute. This allowed our sponsor to keep adding data to the database in the future.
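
The JSON-to-Cypher step can be sketched as below. This is an illustration under assumptions, not the project's code. It assumes the spreadsheet parser yields rows as arrays with a header row first. The label `Entry` and key `id` used in the checks are hypothetical. The sketch builds one parameterized `UNWIND ... MERGE` query rather than splicing cell values into the Cypher text. Labels and property keys cannot be passed as Cypher parameters, so they are validated against a strict pattern instead.

```typescript
type Cell = string | number | boolean | null;
type Row = Record<string, Cell>;

// Convert a sheet given as rows of cells (header row first) into JSON objects,
// skipping rows whose cells are all empty.
function rowsToObjects(table: Cell[][]): Row[] {
  const [header, ...body] = table;
  if (!header) return [];
  const keys = header.map((h) => String(h ?? "").trim());
  return body
    .filter((row) => row.some((cell) => cell !== null && cell !== ""))
    .map((row) => {
      const obj: Row = {};
      keys.forEach((key, i) => {
        if (key) obj[key] = row[i] ?? null; // columns with a blank header are dropped
      });
      return obj;
    });
}

// Labels and property keys cannot be Cypher parameters, so they must be safe identifiers.
const IDENTIFIER = /^[A-Za-z_][A-Za-z0-9_]*$/;

// Build one parameterized query that upserts every row as a node keyed on keyProp.
// Rows without a key value are skipped, since MERGE cannot match on null.
// SET n += row copies each column onto the node (a null cell removes that property).
function buildMergeQuery(
  label: string,
  keyProp: string,
  rows: Row[],
): { query: string; params: { rows: Row[] } } {
  if (!IDENTIFIER.test(label) || !IDENTIFIER.test(keyProp)) {
    throw new Error(`unsafe label or key: ${label}, ${keyProp}`);
  }
  const keyed = rows.filter((row) => row[keyProp] !== null && row[keyProp] !== undefined);
  const query = `UNWIND $rows AS row MERGE (n:${label} {${keyProp}: row.${keyProp}}) SET n += row`;
  return { query, params: { rows: keyed } };
}
```

With the official Neo4j JavaScript driver, the result could be executed as `session.run(query, params)`. Because all values travel as parameters, a malicious cell value cannot change the query itself.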

The backend had other features as well, such as letting users download files stored in various S3 buckets. This involved converting the Excel files into blobs so they could be sent through the API. The backend also populated the website's data tables using data from the Neo4j database, along with information about specific users from AWS Cognito User Pools.
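
The server side of such a download can be sketched as a helper that picks the response headers, so that the browser treats the bytes as a file. On the client, the received bytes can then be wrapped in a `Blob`. This is a hypothetical sketch, not the project's code; `downloadHeaders` and its helpers are invented names. The `Content-Disposition` value follows RFC 6266, with an RFC 5987 `filename*` parameter for non-ASCII file names.

```typescript
// MIME types for the spreadsheet formats a user might download.
const MIME_BY_EXTENSION = new Map<string, string>([
  ["xlsx", "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet"],
  ["xls", "application/vnd.ms-excel"],
  ["csv", "text/csv"],
]);

function mimeTypeFor(fileName: string): string {
  const dot = fileName.lastIndexOf(".");
  const ext = dot >= 0 ? fileName.slice(dot + 1).toLowerCase() : "";
  return MIME_BY_EXTENSION.get(ext) ?? "application/octet-stream";
}

// ASCII fallback name (non-printable-ASCII, quotes, and backslashes become "_")
// plus a UTF-8 percent-encoded name for clients that support filename*.
function contentDisposition(fileName: string): string {
  const asciiFallback = fileName.replace(/[^\x20-\x7e]|["\\]/g, "_");
  // encodeURIComponent leaves ' ( ) * unescaped, but RFC 5987 requires escaping them.
  const encoded = encodeURIComponent(fileName).replace(
    /['()*]/g,
    (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase(),
  );
  return `attachment; filename="${asciiFallback}"; filename*=UTF-8''${encoded}`;
}

// Headers for sending a file's raw bytes as a download.
function downloadHeaders(fileName: string, byteLength: number): Record<string, string> {
  return {
    "Content-Type": mimeTypeFor(fileName),
    "Content-Disposition": contentDisposition(fileName),
    "Content-Length": String(byteLength),
  };
}
```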

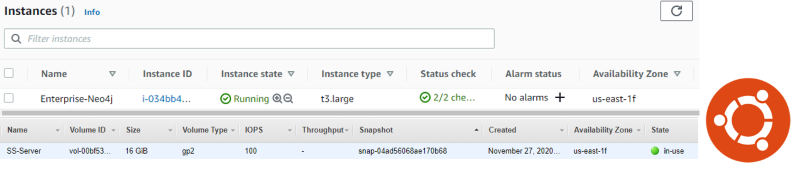

I also helped deploy the project on AWS. We used an EC2 Ubuntu instance, which let us run various scripts to keep the website healthy. These scripts also automatically renewed the SSL/TLS certificates before they expired, without our sponsor ever having to touch them. This was important because History Revealed, Inc. did not have a dedicated IT team. We used Certbot to obtain the certificates from Let's Encrypt.
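
The decision behind automatic renewal boils down to a date comparison, sketched below. In practice `certbot renew` makes this decision itself; the sketch only illustrates the rule. The 30-day window is an assumption based on Certbot's traditional default for 90-day Let's Encrypt certificates. The helper names and the domain names in the checks are hypothetical.

```typescript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Assumed threshold: renew once 30 or fewer days of validity remain.
const RENEW_BEFORE_DAYS = 30;

interface CertInfo {
  domain: string;
  notAfter: Date; // the certificate's expiry time
}

// Fractional days until expiry (negative if already expired).
function daysRemaining(notAfter: Date, now: Date): number {
  return (notAfter.getTime() - now.getTime()) / MS_PER_DAY;
}

function shouldRenew(notAfter: Date, now: Date = new Date(), renewBeforeDays = RENEW_BEFORE_DAYS): boolean {
  return daysRemaining(notAfter, now) <= renewBeforeDays;
}

// Domains whose certificates fall inside the renewal window.
function certsToRenew(certs: CertInfo[], now: Date = new Date()): string[] {
  return certs.filter((cert) => shouldRenew(cert.notAfter, now)).map((cert) => cert.domain);
}
```

Running such a check on a daily schedule is harmless when nothing is due. With a 30-day window, a few missed runs still leave plenty of time to renew.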

We also set up several subdomains. One served the Neo4j Browser, a web UI that let our sponsor work with the database directly. Another served the documentation, which covers every aspect of this project.

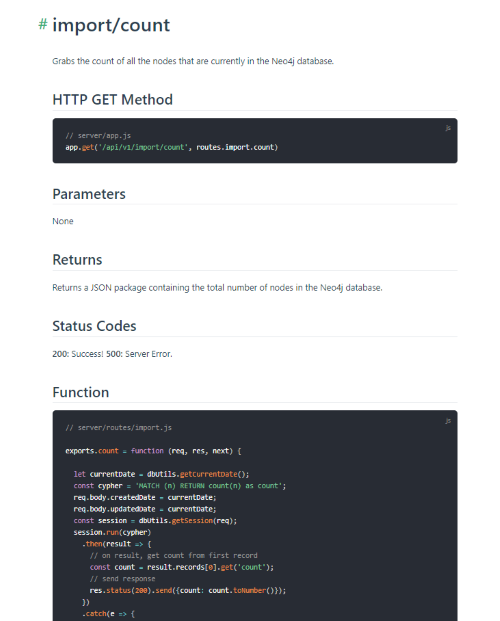

The goal was for future teams to get up to speed quickly and build on our work. For instance, the entire API, including its status codes and return values, is documented on a VuePress site hosted in an S3 bucket.

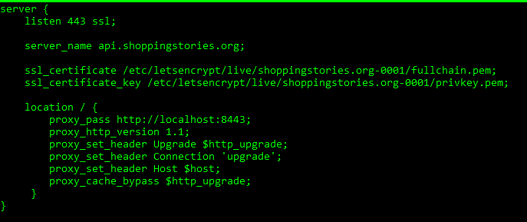

Finally, I set up an NGINX proxy server that caught all HTTP requests and redirected them to encrypted, secure HTTPS for the website, the API, and the Neo4j Browser. What a great tool!
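
In NGINX, this redirect is typically a `server` block listening on port 80 that contains `return 301 https://$host$request_uri;`. The same rule is sketched below in TypeScript to keep one language across these examples. The host names are hypothetical placeholders for the website, API, and Neo4j Browser subdomains. Checking the Host header against a known list keeps the redirect from sending visitors to arbitrary hosts.

```typescript
// Hypothetical host names standing in for the website, API, and Neo4j Browser.
const ALLOWED_HOSTS = new Set(["example.org", "api.example.org", "neo4j.example.org"]);

interface RedirectResult {
  status: 301 | 400;
  location?: string;
}

// Any plain-HTTP request for a known host gets a permanent redirect to the
// same host and path over HTTPS; unknown or missing hosts are rejected.
function redirectToHttps(hostHeader: string | undefined, requestUri: string): RedirectResult {
  const host = (hostHeader ?? "").split(":")[0].toLowerCase(); // drop any port
  if (!ALLOWED_HOSTS.has(host)) return { status: 400 };
  const path = requestUri.startsWith("/") ? requestUri : "/";
  return { status: 301, location: `https://${host}${path}` };
}
```

A 301 tells browsers the move is permanent, so they can go straight to HTTPS on later visits.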

At the end of the year, we presented the project to a committee of Computer Science professors. The presentation went extremely well. The professors were impressed by our professionalism, the effort we put in, and especially the VuePress documentation. Most importantly, our sponsor said she was proud of what we achieved.

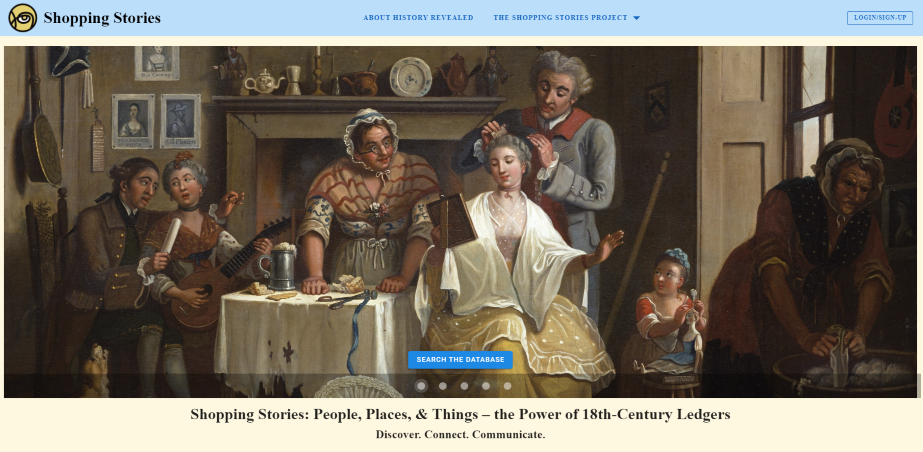

You can view the Shopping Stories website here!